The importance of data mining is rising for organizations, and the trend is not surprising. A 2020 Harvard Business Review survey of 600 top executives found that 55% of organizations considered data analytics crucial for decision-making, and 92% expected its importance to grow further over the next two years. Data mining is a powerful technique that helps organizations overcome barriers, improve internal processes, and increase revenue by uncovering hidden patterns and meaning in large volumes of data. With that knowledge, a company can devise new market-penetration strategies, make internal processes more efficient, raise customer loyalty, uncover fraudulent activity, and strengthen compliance, among other benefits. These effects are seen in industries as diverse as healthcare, financial services and banking, insurance, and retail, as well as across science and engineering.

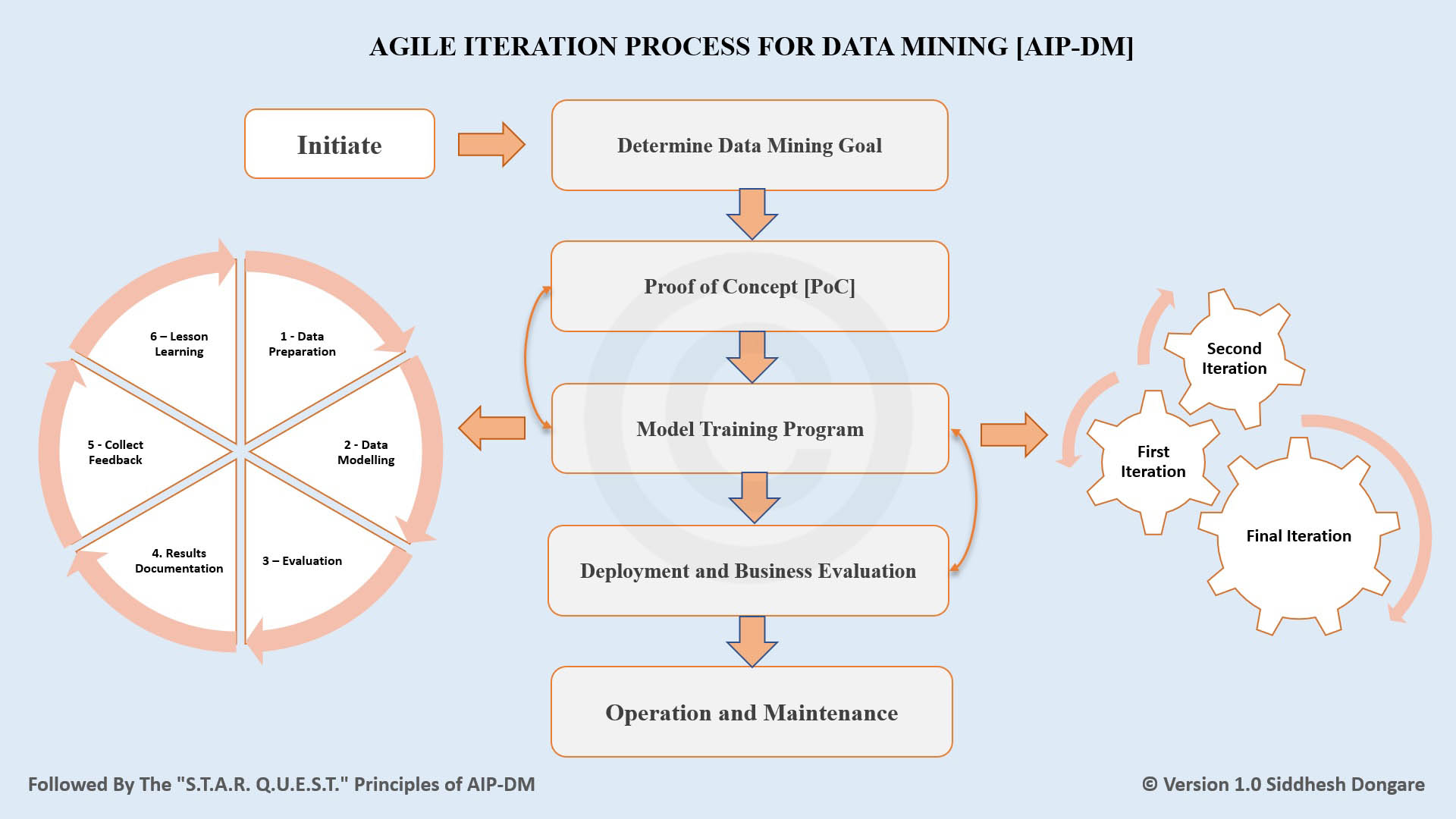

In today’s hypercompetitive and constantly evolving business environment, leaders around the globe see agility as critical for success, particularly in data science, where business needs, market conditions, and data sources are always changing. The Agile Iteration Process for Data Mining (AIP-DM) framework was developed so that data science projects can remain flexible, efficient, and aligned with business goals as those goals shift over time. AIP-DM, which I (Siddhesh Dongare) designed and developed in 2022 in collaboration with the data science and analysis group at MasterCard's Open Banking division, emphasizes adaptability, collaboration, and efficiency, bridging the gap between traditional data mining approaches and the need for flexibility and agility.

The purpose of AIP-DM is to simplify complexity by integrating agile practices, enabling data projects to deliver high-quality, actionable insights in a fast-moving technological and competitive environment.

The Inspiration Behind AIP-DM

In 2022, I worked with a team of data scientists, analysts, and engineers. It was an exciting time: we were starting a new Open Banking API development project to enter new international markets and explore new opportunities. Given how new the market was, an Agile methodology was clearly the most effective approach, because banks were adapting to the new demand, competitors were following suit, and regulations were still evolving and prone to change.

As a team, we needed to complete the work quickly while maintaining high quality. However, we faced a problem: the data science team was concerned that Agile Scrum wasn’t the right fit for a data mining process. They felt that data mining, and data science generally, differs in nature from software development, and they pointed out several key challenges:

- 1. The focus on processes could detract from scientific exploration.

- 2. Time-boxed sprints might limit the room for exploratory analysis.

- 3. A structured approach seemed to constrain the flexibility needed for innovation.

- 4. It was challenging to measure progress or demonstrate value early on.

- 5. The unpredictable nature of data work made sprint planning tricky.

- 6. The complexity of data mining necessitated intense focus without interruption.

After considering these concerns, I realised that Agile methodologies don't work the same way for every team. Scrum is structured and follows set processes, whereas the team wanted something more creative: an approach focused on continuous improvement that leveraged Agile's adaptable, experimental nature. At this point, the problem became clear. We needed a data mining process model, or data project management framework, that followed Agile principles and values, which meant creating a new, custom framework. To solve the problem, I decided to think outside the box and design a process model that combined Agile principles with the flexibility and exploration that data mining requires. That’s how the AIP-DM framework became a reality. It helped us stay agile, adjust quickly to what we found, and maintain the scientific curiosity that’s essential in data mining.

Importantly, I applied the 80-20 rule, derived from the Pareto Principle, to the AIP-DM framework: we focused 20% of our effort on the process and 80% on fostering an agile way of working and cultivating a supportive culture. This helped the team strike the right balance between having a clear guide and leaving enough space for creativity, flexibility, and new ideas. The result was a process that fit the team’s needs, balancing the creativity of data exploration with the speed and adaptability of Agile while delivering business value. It wasn’t just about getting the job done; it was about creating a way of working that motivated the team to discover more, innovate faster, and deliver high-quality results!

That sounds interesting, right? Now let’s understand how to apply the AIP-DM framework in practice.

Agile Iteration Process for Data Mining (AIP-DM)

STEP 1: INITIATE

[OUTCOME: BUSINESS GOAL DEFINITION]

The AIP-DM process starts with a collaborative phase in which stakeholders identify the project scope, data study criteria, and business objectives. This step, called Business Requirements Understanding and Defining Project Scope, focuses on defining the business goals and ensuring a deep understanding of customer needs, which is critical to the project’s success. In this phase, key stakeholders (such as subject matter experts, analysts, business stakeholders, the data science team, and the servant leader or project manager) convene to discuss project requirements, set expectations, and align on the overall direction.

The key activities include:

- 1. Defining the problem statement and setting clear business objectives.

- 2. Understanding customer needs and aligning them with business objectives and project goals.

- 3. Identifying the scope of data analysis and the criteria for data study.

- 4. Identifying key stakeholders to ensure alignment and shared understanding.

- 5. Forming a self-organised team and engaging stakeholders for smooth execution.

- 6. Agreeing on model interpretability requirements so that results will be transparent and explainable.

This phase results in a well-defined business goal that clearly articulates the problem to solve, the objectives to achieve, and the metrics for success. Such an outcome is the foundation for the next steps in the AIP-DM process, guiding data preparation, model development, and subsequent iterations.

STEP 2: DETERMINE DATA MINING GOAL

[OUTCOME: DATA MINING GOAL DEFINITION]

To start any data project, a high-level business goal must first be translated into a clear and actionable data mining goal. This step involves determining the insights and outcomes the team wants to extract from the data in order to achieve the business objectives. Business and technology teams must collaborate closely to ensure that the data mining goals are both aligned with business needs and technically feasible.

Once the data mining goals are clearly defined, the team determines the data required to achieve these outcomes. Data collection is a critical next step since the type and quality of data collected significantly impact the success of the modeling techniques and the overall data mining process. Finally, in Step 3: Proof of Concept (POC), the data mining team applies initial modeling techniques on the collected data to validate whether the approach will be effective.

The key activities include:

- 1. Initial analysis and understanding of available data to ensure alignment with business objectives.

- 2. Identifying key questions or problems to be solved through data mining.

- 3. Agreeing on the definition of done, interpretability metrics, and success criteria for evaluating the results.
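One way to make the definition of done and the success criteria concrete is to record them as data that the team can check at the end of each iteration. The sketch below is a hypothetical illustration rather than part of AIP-DM itself. It assumes every metric is "higher is better", and the names `SuccessCriteria` and `is_done`, along with the example thresholds, are placeholders.

```python
from dataclasses import dataclass, field


@dataclass
class SuccessCriteria:
    """Hypothetical record of the success criteria agreed in Step 2."""
    # Minimum acceptable value per metric; assumes higher is better for all.
    metric_thresholds: dict[str, float]
    # Whether stakeholders require explanations alongside predictions.
    requires_explanations: bool = True
    # Definition-of-done items that must all be completed.
    done_checklist: list[str] = field(default_factory=list)


def is_done(criteria, results, explanations_available, completed):
    """Return True only when every agreed criterion is satisfied."""
    # A metric missing from `results` is treated as a failure.
    metrics_ok = all(
        results.get(name, float("-inf")) >= threshold
        for name, threshold in criteria.metric_thresholds.items()
    )
    explain_ok = explanations_available or not criteria.requires_explanations
    checklist_ok = all(item in completed for item in criteria.done_checklist)
    return metrics_ok and explain_ok and checklist_ok


# Illustrative thresholds only; real values come from stakeholder agreement.
criteria = SuccessCriteria(
    metric_thresholds={"recall": 0.80, "precision": 0.70},
    done_checklist=["results documented", "stakeholder review held"],
)
print(is_done(criteria, {"recall": 0.85, "precision": 0.72}, True,
              {"results documented", "stakeholder review held"}))  # prints True
```

Writing the criteria down this explicitly also makes later disagreements about whether an iteration is "done" easier to resolve, because the thresholds are visible to everyone.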

Setting data mining goals only after gaining insight into the data keeps the objectives grounded in what the data can support. Active stakeholder engagement, however, remains essential for navigating data constraints and maintaining project momentum.

STEP 3: PROOF OF CONCEPT

[OUTCOME: FINALISED MODELING TECHNIQUES]

Before moving into full-scale data mining, the team develops a Proof of Concept (POC) to validate the feasibility of different data mining techniques and ensure alignment with the project goals. A successful POC reduces the uncertainty and risks involved in implementing a new data science project, especially for stakeholders concerned about resource and time investment.

During the POC, the team experiments with initial modeling techniques (e.g., clustering, classification, regression), running small-scale tests to identify the most effective approaches for the specific problem. The primary goal is to assess how well the selected techniques align with the business objectives and to confirm their feasibility before proceeding further. This phase is iterative, allowing the team to refine techniques based on initial results. Depending on project requirements, this phase may involve more advanced techniques, such as machine learning, deep learning, or generative AI, particularly when the project involves a large, complex dataset and demands high accuracy. Applied to real data, these techniques validate whether the model can achieve the business goals.

The key activities include:

- 1. Choosing initial modeling techniques based on the problem type.

- 2. Running tests on small samples of data to evaluate model performance.

- 3. Implementing initial interpretability techniques (e.g., SHAP, LIME) to assess how well stakeholders can understand model predictions.

- 4. Finalising the most appropriate techniques and determining any necessary data preprocessing or hyperparameter tuning strategies.
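The small-sample tests in the activities above can be sketched as a harness that trains each candidate technique on a random subset of the data and scores it on a held-out slice. This is a minimal, standard-library sketch that assumes a binary classification problem with numeric features. The two candidates (a majority-class baseline and a 1-nearest-neighbour classifier) and all function names are hypothetical stand-ins for whatever techniques a real POC would compare.

```python
import random
from collections import Counter


def majority_baseline(X, y):
    """Candidate 1: always predict the most common training label."""
    label = Counter(y).most_common(1)[0][0]
    return lambda row: label


def nearest_neighbour(X, y):
    """Candidate 2: 1-nearest-neighbour using squared Euclidean distance."""
    def predict(row):
        dists = [sum((a - b) ** 2 for a, b in zip(row, x)) for x in X]
        return y[dists.index(min(dists))]
    return predict


def run_poc(candidates, X, y, sample_size=200, test_fraction=0.3, seed=0):
    """Train and score each candidate on one small random sample.

    Returns (accuracy per candidate, name of the best candidate).
    """
    rng = random.Random(seed)  # fixed seed so every candidate sees the same split
    idx = rng.sample(range(len(X)), min(sample_size, len(X)))
    cut = int(len(idx) * (1 - test_fraction))
    train, test = idx[:cut], idx[cut:]
    if not train or not test:
        raise ValueError("sample too small for a train/test split")
    scores = {}
    for name, fit in candidates.items():
        model = fit([X[i] for i in train], [y[i] for i in train])
        scores[name] = sum(model(X[i]) == y[i] for i in test) / len(test)
    return scores, max(scores, key=scores.get)


# Synthetic, clearly separable data for illustration only.
X = [[float(i)] for i in range(100)]
y = [0 if i < 50 else 1 for i in range(100)]
scores, best = run_poc({"baseline": majority_baseline,
                        "1-NN": nearest_neighbour}, X, y)
print(scores, best)
```

Including a trivial baseline is a deliberate choice. A candidate that cannot beat it on the sample is unlikely to justify full-scale training in Step 4.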

Finalising the modeling techniques ensures the right fit between the problem's requirements, data characteristics, and resource constraints. It also streamlines the later stages, ensuring efficient resource use and paving the way for successful model deployment. Once the POC has validated the best modeling techniques, the team can confidently move forward with full-scale model training. In that phase, the selected techniques are applied to the entire dataset, and the model undergoes rigorous training and evaluation to achieve the project goals.

Note: Refinement and iteration are most pronounced across Step 3 and Step 4. Lessons learned from the results of each iteration allow the team to explore and improve modelling methods and/or preprocessing stages. This keeps model development agile, so the team can address new information and challenges before implementing the model at scale. This back-and-forth is particularly important for improving model accuracy and meeting project objectives. While this iterative loop is beneficial, it is optional rather than mandatory.

STEP 4: MODEL TRAINING PROGRAM

[OUTCOME: TRAINED MODEL AND EVALUATION RESULTS]

In data science, agile iteration is essential for extracting, visualising, and productising insights. This iterative focus on continual improvement encourages data scientists to collaborate with subject matter experts and stakeholders, enabling them to refine models more effectively.

This step involves several cycles of building, training, evaluating, and refining the data mining model. The training program is structured into three iterations: first, second, and final. After each iteration, the model is enhanced based on feedback and evaluation metrics. More iterations can be run to build a robust model, but at least two are crucial to ensure the model is thoroughly trained and tested.

The iterative steps involved in the Model Training Program include:

- 1. Data Preparation: This step involves cleaning and transforming the data to make it suitable for modeling. It includes handling missing values, normalizing data, creating new features (feature engineering), and splitting the dataset into training and testing subsets. Proper data preparation and feature engineering are vital as they directly impact model accuracy and reliability.

- 2. Data Modeling: The chosen models are built and trained here using the selected techniques (ML, DL, or generative AI). Adjustments are made to improve accuracy, including selecting appropriate architectures and hyperparameters. This is where the core modeling takes place.

- 3. Evaluation: The model's performance is assessed using metrics suited to the task, such as accuracy, precision, recall, and F1-score for classification, or mean squared error (MSE) for regression. Evaluation metrics reveal the model's strengths and weaknesses, guiding necessary refinements.

- 4. Results Documentation: Documenting outcomes, model parameters, and evaluation metrics is critical for transparency and reproducibility. This ensures that all team members and stakeholders understand the model's workings and can replicate results if needed.

- 5. Collect Feedback: Gathering feedback from stakeholders and subject matter experts is essential for refining the model. Their insights can lead to relevant feature additions, improvements in model applicability, and adjustments based on real-world use cases.

- 6. Lessons Learned (Retrospective): Reflecting on each iteration to learn from successes and challenges, and applying those lessons to future iterations.
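The Evaluation activity can be illustrated with plain-Python versions of the metrics it names. This is a minimal sketch that assumes binary labels for the classification metrics, and the function names are our own. In practice, scikit-learn's `sklearn.metrics` module (`precision_score`, `recall_score`, `f1_score`, `mean_squared_error`) provides tested implementations.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, recall and F1 for a binary classification task."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("y_true and y_pred must be non-empty and equal in length")
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)  # true positives
    fp = sum(t != positive and p == positive for t, p in pairs)  # false positives
    fn = sum(t == positive and p != positive for t, p in pairs)  # false negatives
    accuracy = sum(t == p for t, p in pairs) / len(pairs)
    # Treat a ratio as 0.0 when its denominator is zero, avoiding division errors.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


def mean_squared_error(y_true, y_pred):
    """Average squared difference between targets and predictions (regression)."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("y_true and y_pred must be non-empty and equal in length")
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


print(classification_metrics([1, 0, 1, 1, 0], [1, 0, 0, 1, 1]))
print(mean_squared_error([3.0, 5.0], [2.0, 5.0]))  # prints 0.5
```

Recording these values for every iteration gives the Results Documentation and Collect Feedback activities a shared, comparable basis.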

Repeat from activity 1 (Data Preparation): It is recommended to refine the model through at least three rounds of iteration. New insights or challenges may require going back to earlier activities, such as data preparation or modeling. This iterative process keeps the project on track while allowing flexibility along the way.
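The repeat-and-refine cycle can be sketched as a loop that runs a minimum number of iterations, documents each one, and stops once an agreed target is met or the iteration budget is spent. This is a hypothetical skeleton. `train_and_score` stands in for data preparation, modeling, and evaluation, and `refine` stands in for feedback collection and the retrospective. The defaults of two minimum and three maximum iterations simply mirror the guidance above.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class IterationRecord:
    """What gets documented after each iteration (Results Documentation)."""
    iteration: int
    params: dict
    score: float
    feedback: str


def training_program(
    train_and_score: Callable[[dict], float],
    refine: Callable[[dict, float], tuple],
    initial_params: dict,
    min_iterations: int = 2,
    max_iterations: int = 3,
    target_score: Optional[float] = None,
) -> list:
    """Run refine-and-retrain cycles; return one record per iteration."""
    if not 1 <= min_iterations <= max_iterations:
        raise ValueError("need 1 <= min_iterations <= max_iterations")
    records = []
    params = dict(initial_params)
    for i in range(1, max_iterations + 1):
        # Data preparation, modeling and evaluation happen inside this call.
        score = train_and_score(params)
        # Stakeholder feedback and the retrospective propose the next settings.
        next_params, feedback = refine(params, score)
        records.append(IterationRecord(i, dict(params), score, feedback))
        target_met = target_score is not None and score >= target_score
        # Stop early only once the minimum number of iterations has run.
        if i >= min_iterations and target_met:
            break
        params = next_params
    return records


# Toy usage: the "model" improves as a hypothetical `depth` setting grows.
history = training_program(
    train_and_score=lambda p: p["depth"] / 10,
    refine=lambda p, s: ({"depth": p["depth"] + 1}, f"score {s:.2f}"),
    initial_params={"depth": 7},
    target_score=0.75,
)
for record in history:
    print(record)
```

Requiring a minimum number of iterations before an early stop reflects the guidance that even a good first result should go through at least one round of feedback.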

The outcome is a trained model that has been evaluated for its effectiveness and has gone through 2-3 iterations to refine its accuracy and results.

Note: Each iteration in Step 4 refines the model, and if needed, feedback or learnings may lead to revisiting techniques from Step 3 to ensure the final model meets performance goals.

STEP 5: DEPLOYMENT AND TESTING

[OUTCOME: SUCCESSFULLY DEPLOYED AND TESTED MODEL]

After successfully training the model through multiple iterations, the next essential step is deployment and testing. This phase is where the model transitions from a development environment into a production environment, allowing it to provide real-time insights or predictions that can drive business decisions.

Key tasks include:

- 1. Create a comprehensive plan for how and where the model will be used: as an API, integrated into existing applications, or in batch-processing mode. Design the deployment so that it can scale quickly, adapting to growing dataset size, user base, and transaction frequency while sustaining consistent performance.

- 2. Deploy the model to the chosen platform (e.g., cloud or on-premises), ensuring that all necessary dependencies and configurations are in place. This may involve working closely with IT and DevOps teams to ensure seamless integration.

- 3. Conduct tests to confirm the model’s accuracy and reliability.

- 4. Make adjustments based on test results, with a focus on improving interpretability.
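Before a model is promoted, the tests described above can be automated as an acceptance gate that checks accuracy on held-out data and prediction latency in the same run. This is a minimal sketch. The function name, the 90% accuracy floor, and the 50 ms 95th-percentile latency budget are hypothetical placeholders that each team would set from its own business requirements.

```python
import statistics
import time


def acceptance_test(predict, X, y, min_accuracy=0.9, max_p95_ms=50.0):
    """Gate a release on holdout accuracy and 95th-percentile latency."""
    if len(X) != len(y) or len(X) < 2:
        raise ValueError("need at least two labelled holdout examples")
    latencies_ms = []
    correct = 0
    for row, label in zip(X, y):
        start = time.perf_counter()
        prediction = predict(row)  # one call per example, as an API would see it
        latencies_ms.append((time.perf_counter() - start) * 1000.0)
        correct += prediction == label
    accuracy = correct / len(y)
    # quantiles(n=20) returns 19 cut points; the last is the 95th percentile.
    p95_ms = statistics.quantiles(latencies_ms, n=20, method="inclusive")[-1]
    return {
        "accuracy": accuracy,
        "p95_ms": p95_ms,
        "passed": accuracy >= min_accuracy and p95_ms <= max_p95_ms,
    }


# Toy model and holdout set, for illustration only.
def threshold_model(row):
    return 1 if row[0] > 0.5 else 0


holdout_X = [[0.1], [0.9], [0.2], [0.8], [0.4], [0.7]]
holdout_y = [0, 1, 0, 1, 0, 1]
report = acceptance_test(threshold_model, holdout_X, holdout_y)
print(report["passed"], report["accuracy"])
```

Measuring latency per call approximates how an API deployment would be exercised. A batch deployment would more likely measure throughput over the whole holdout set instead.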

A deployed and tested model has been thoroughly verified for accuracy and performance, confirming its readiness for operational use. It should provide reliable predictions and insights that align with business objectives and user needs.

Note: The iterative loop between Step 4 (Model Training Program) and Step 5 (Deployment and Testing) is another essential part of building a robust, effective model. Once deployment and initial testing have taken place, feedback from testing or live use often calls for further work to improve the accuracy and effectiveness of the model's predictions. This may mean returning to Step 4 to retrain, rework, or further develop the model based on what has been observed in practice. This continual cycle of training and deployment keeps the model's progress aligned with business expectations before it is fully implemented. While this back-and-forth approach can be valuable for optimising the model, it is optional; whether it is needed depends on the findings gained during testing.

STEP 6: OPERATION AND MAINTENANCE

[OUTCOME: MONITORED AND UPDATED MODEL]

After deployment, the focus shifts to maintaining the model's operational effectiveness. This step involves continuous monitoring and maintenance to ensure the model adapts to new data and evolving business requirements over time.

Key tasks include:

- 1. Real-time monitoring of the model’s performance to ensure it continues to meet business objectives.

- 2. Regularly updating the model with new data to keep it accurate and relevant.

- 3. Adding new patterns or features that help maintain consistent model performance.

- 4. Addressing any issues, bugs, or performance drops that arise during operation.
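Real-time monitoring often includes a data-drift check that compares the distribution of a feature in live traffic with the distribution the model was trained on. The sketch below uses one common choice, the Population Stability Index, PSI = sum over bins of (a_i - e_i) * ln(a_i / e_i). Here e_i and a_i are the baseline and live shares of each bin, and the bins are equal-width over the training range. The bin count, the small `eps` floor for empty bins, and the 0.1 and 0.25 alert levels are widely used rules of thumb. AIP-DM does not prescribe them.

```python
import math


def population_stability_index(expected, actual, bins=10, eps=1e-6):
    """PSI between a baseline sample (`expected`) and a live sample (`actual`)."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def shares(values):
        counts = [0] * bins
        for v in values:
            k = int((v - lo) / width)
            counts[min(max(k, 0), bins - 1)] += 1  # clamp outliers into edge bins
        # Floor empty bins at eps so the logarithm stays finite.
        return [max(c / len(values), eps) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def drift_status(psi):
    """Map a PSI value to a status using common rule-of-thumb cut-offs."""
    if psi < 0.1:
        return "stable"
    if psi < 0.25:
        return "moderate drift: investigate"
    return "significant drift: consider retraining"


baseline = [i / 100 for i in range(100)]    # feature values seen in training
live = [0.5 + i / 200 for i in range(100)]  # live values shifted upward
psi = population_stability_index(baseline, live)
print(round(psi, 3), drift_status(psi))
```

A persistent "significant drift" status is a natural trigger for the model updates described in task 2 above, feeding back into the Step 4 training loop.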

A monitored and updated model is one that continues to deliver valuable insights, remains relevant, and meets evolving business needs over time. By effectively managing operations and maintenance, organisations can ensure that their models continue to drive informed decision-making and support strategic objectives.

Summary

The AIP-DM (Agile Iteration Process for Data Mining) process model emphasizes continuous improvement through iterative cycles. The purpose of AIP-DM is to simplify complexity by integrating agile practices, enabling data projects to deliver high-quality, actionable insights in a fast-moving technological and competitive environment. Each step of the process ensures that data mining aligns with business goals, provides actionable insights, and delivers measurable results. By following this approach, organizations can effectively leverage their data to make informed decisions and drive success, ensuring that their data science initiatives remain impactful and sustainable over time.

Siddhesh Dongare

Inventor (AIP-DM & UnLeASH Agile Methodology) | Awarded Agility Coach (CAL-E® | CAL-T® | CAL-O® | PAL-EBM® | ICP-ENT®) | PMP® | PMI ACP® | Global Open Banking | Data Science (AI & ML) | Book Author