Let’s start with a fundamental truth: data science is about discovery, and discovery requires flexibility. With an agile mindset—and a commitment to learn, relearn, and unlearn—you can embrace the unknown, collaborate effectively, and grow at every step. So the next time your project takes an unexpected turn, remember this: Uncertainty isn’t the enemy. It’s an invitation.

An agile mindset turns any challenge into a chance to discover, innovate, and grow. Data science isn’t about having all the answers. It’s about being ready to find them, wherever the journey takes you.

But let’s not forget an important piece of this puzzle. What made AIP-DM work wasn’t just the steps in the process—it was the people who made those steps a reality. Their agility, creativity, and commitment to constant improvement were the real drivers of success. So, here’s my message to you: If you’re leading a data project, don’t just focus on the tools or the framework. Focus on your team. Empower them. Give them a clear purpose, the freedom to innovate, and the courage to fail and learn from it. Because at the end of the day, it’s not the data or the algorithms that unlock transformative insights—it’s the people who work with them. And when those people adopt an agile mindset, they don’t just deliver results—they redefine what’s possible.

Now, let’s talk about Data Mining. Data mining is all about finding hidden patterns and valuable insights in large amounts of data. These insights help businesses make smarter decisions, improve their processes, and grow faster. But here’s the problem: businesses today face constant changes. Markets shift, customers’ needs evolve, and competitors introduce new ideas all the time. Traditional data mining methods aren’t flexible enough to keep up with these changes. That’s why I created AIP-DM.

Here’s why this matters. A survey by Harvard Business Review in 2020 found that over half of top executives consider data crucial for decision-making, and 92% believe its importance will grow. In industries like healthcare, banking, retail, and insurance, data mining helps detect fraud, improve customer satisfaction, meet legal requirements, and reduce costs. But to stay competitive, businesses need tools that can change as quickly as the world around them. That’s where AIP-DM stands out. With AIP-DM, you’re not just keeping up in a fast-changing world; you’re staying ahead. It’s more than a method: it’s an approach to turning obstacles into opportunities and building a foundation for lasting success.

The goal of AIP-DM is to simplify complex processes by incorporating agile practices, allowing data projects to produce high-quality, actionable insights in today’s fast-paced and competitive environment. Let’s now explore how to apply the AIP-DM framework in real-world scenarios.

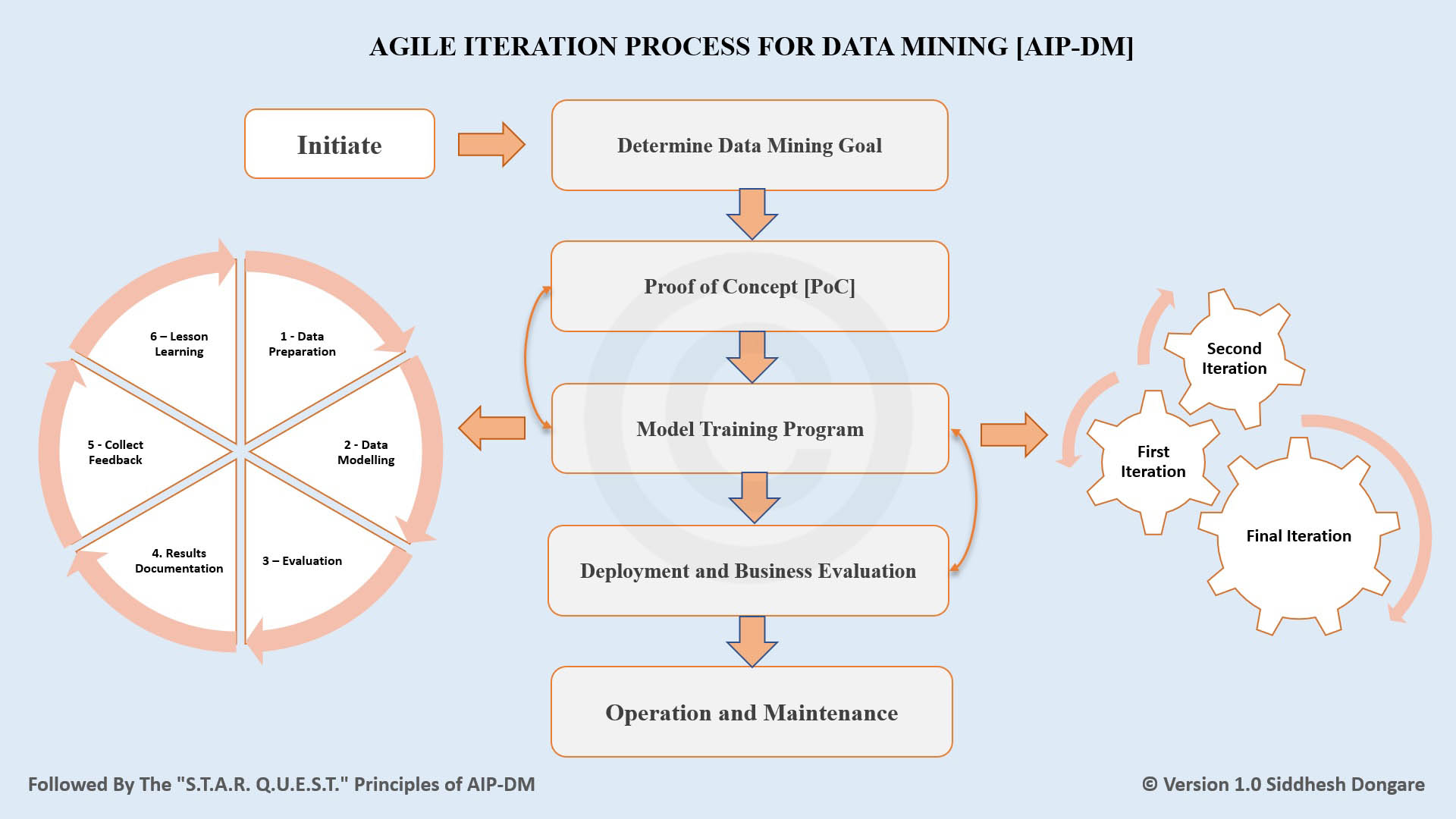

Agile Iteration Process for Data Mining (AIP-DM)

The Agile Iteration Process for Data Mining (AIP-DM) is a structured and adaptive methodology for managing data science, data mining, and analytics projects. It integrates agile principles with iterative cycles to ensure projects deliver actionable insights that align with business goals. Using the AIP-DM framework ensures that projects remain focused on business objectives, adapt to evolving requirements, and deliver measurable value through continuous refinement and stakeholder collaboration.

The "S.T.A.R. Q.U.E.S.T." Principles of AIP-DM

AIP-DM promotes an agile mindset and approach to data science, ensuring that projects are adaptable, collaborative, and focused on delivering value. Picture a team setting out on a journey, guided by the principles of STAR (Stakeholders, Transparency, Adaptation, Rigor) to achieve the vision of QUEST (User-Centric Value Delivery through Quality and Ethical Solutions, Together).

- S – Stakeholder Collaboration: Stay in sync! Keep stakeholders engaged and informed.

- T – Transparency and Accountability: Shine a light! Make decisions clear, share progress openly, and own every step to build trust and confidence.

- A – Adaptive Planning: Go with the flow! Be ready to pivot plans as new data, insights, or business needs emerge.

- R – Rigorous Quality Focus: Polish every gem! Maintain high standards from raw data to deployed models. Reliable inputs mean reliable outputs.

- Q – Quality and Validation Over Quick Fixes: Don’t rush the brush! Ensure every phase (data, models, and results) meets standards of excellence.

- U – User-Centric Value Delivery: Deliver delight! Make every iteration impactful by focusing on what truly matters to the business and its users.

- E – Ethical Considerations: Keep it fair! Handle data responsibly, explain models clearly, and meet the highest ethical standards.

- S – Sustainable Development: Pace the race! Balance workloads to keep the team energized and productive throughout the journey.

- T – Team Empowerment: Power up the team! Trust your team to make decisions, encourage innovation, and support them with the right tools.

Key Phases:

The AIP-DM framework is divided into six major steps, each designed to address specific objectives and deliverables:

STEP 1: INITIATE

Outcome: Lay the foundation for the project by clearly defining business goals, project requirements, and achieving team and stakeholder alignment.

Focus: Establish a clear understanding of the business context and objectives, ensure alignment among stakeholders, and set a structured direction for the data project initiative.

Key Activities:

- Define the Problem Statement and Objectives.

- Conduct Stakeholder Analysis.

- Define the Scope and Criteria for Analysis.

- Identify key stakeholders to ensure alignment and shared understanding.

- Form a self-organized team and engage stakeholders for smooth execution.

- Define Project Success Metrics and Feedback Channels for Continuous Improvement.

- Incorporate Risk Management Strategies to identify and mitigate potential issues early.

- Address Ethical Considerations and Ensure Model Interpretability to facilitate transparent and explainable results.

Results:

- A clear and actionable business problem statement and objectives.

- Clearly outlined project scope and criteria for analysis.

- Identified stakeholders and a cohesive, self-organized team.

- Success metrics and ethical guidelines for transparency and accountability.

- Stakeholder alignment with shared expectations and goals.

- Established feedback mechanisms for adaptive planning.

- A foundation ready for iterative and adaptive execution.

STEP 2: DETERMINE DATA MINING GOAL

Outcome: Translate business objectives into specific, measurable, and feasible data mining goals.

Focus: This step ensures that the technical aspects of the project are aligned with business objectives, identifies and evaluates data sources, and establishes criteria for success in data mining.

Key Activities:

- Translate Business Objectives into Data Mining Goals.

- Define Technical Goals and Success Criteria.

- Assess Data Sources and Conduct Data Quality Assessment.

- Validate Scope Against Feasibility.

- Select appropriate technologies and tools for data mining and model training.

- Engage Stakeholders and Formalize Feedback Channels.
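To make the activities above more concrete, here is a minimal Python sketch of how a team might record one data mining goal with a measurable success criterion and run a basic data quality check. Everything in it is a hypothetical illustration rather than part of AIP-DM itself: the `DataMiningGoal` structure, the churn example, the 0.80 recall target, and the 25% missing-value threshold are all assumptions.

```python
from dataclasses import dataclass


@dataclass
class DataMiningGoal:
    """A business objective paired with a measurable technical target (hypothetical structure)."""
    business_objective: str
    technical_goal: str
    metric: str
    target: float

    def is_met(self, observed: float) -> bool:
        # Assumes a higher metric value is better (e.g. recall, accuracy).
        return observed >= self.target


def missing_rate(rows, column):
    """Share of rows where `column` is absent or None."""
    if not rows:
        return 0.0
    missing = sum(1 for row in rows if row.get(column) is None)
    return missing / len(rows)


def quality_report(rows, columns, max_missing=0.2):
    """Map each column to (missing rate, whether it stays within the threshold)."""
    report = {}
    for column in columns:
        rate = missing_rate(rows, column)
        report[column] = (rate, rate <= max_missing)
    return report


# Illustrative goal: the objective, metric, and target are made up for this example.
goal = DataMiningGoal(
    business_objective="Reduce customer churn",
    technical_goal="Flag customers likely to leave within 90 days",
    metric="recall",
    target=0.80,
)

# Tiny made-up sample standing in for a real data source.
sample = [
    {"customer_id": 1, "tenure_months": 12, "monthly_spend": 40.0},
    {"customer_id": 2, "tenure_months": None, "monthly_spend": 55.5},
    {"customer_id": 3, "tenure_months": 30, "monthly_spend": None},
    {"customer_id": 4, "tenure_months": 7, "monthly_spend": None},
]
report = quality_report(sample, ["tenure_months", "monthly_spend"], max_missing=0.25)
```

In this sample, `monthly_spend` exceeds the missing-value threshold, which is the kind of finding that would feed into the preprocessing needs and feasibility assessment for this step.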

Results:

- Specific and measurable data mining goals aligned with business needs.

- Defined technical success criteria and benchmarks.

- A comprehensive understanding of data quality and preprocessing needs.

- A feasibility assessment confirming the achievability of data mining goals.

- Technology and tool selections that are appropriate and scalable.

- Stakeholder validation and alignment on technical objectives, with a framework for ongoing engagement.

- Continuous governance and compliance monitoring, ensuring adherence to ethical and legal standards.

STEP 3: PROOF OF CONCEPT

Outcome: Finalize modeling techniques and validate the initial assumptions through a small-scale implementation to demonstrate the feasibility of the data mining solution.

Focus: This step is crucial for testing the viability of the proposed solutions before full-scale deployment. It aims to refine modeling techniques, test hypotheses, and evaluate preliminary results against set criteria to ensure alignment with business objectives.

Key Activities:

- Choose potential modeling techniques that align with the defined data mining goals.

- Create a scaled-down version of the model to test the concepts and approaches in a controlled environment.

- Run the prototype on a small, representative dataset to identify potential issues and ensure it meets the preliminary requirements.

- Assess the performance of the model against predefined success criteria to determine its effectiveness.

- Solicit input from key stakeholders to incorporate into the refinement of the model.

- Make necessary adjustments to the modeling techniques based on feedback and testing outcomes.

- Record the results, challenges, and lessons learned from the proof of concept to guide the next steps.

Results:

- Confirmed appropriate modeling techniques that are capable of achieving the data mining goals.

- A functioning prototype that demonstrates the potential of the full-scale model.

- Identified strengths and weaknesses in the approach, providing a clear direction for refinement.

- Adjustments made based on stakeholder insights, enhancing the model’s alignment with business needs.

- Detailed documentation of the proof of concept process, results, and decision-making rationale, supporting transparency and informed decision-making for proceeding to full-scale implementation.

- Note: The project may return to this step from Step 4 if unforeseen complexities or data characteristics call for further refinement of the modeling techniques. Stepping back in this way lets the project adapt to new insights and stay relevant and effective.
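Assessing a prototype against predefined success criteria can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the threshold rule in `prototype_model` is a stand-in for whatever scaled-down model a team actually builds, and the sample transactions and the criteria values are invented for the example.

```python
def precision_recall(y_true, y_pred):
    """Compute precision and recall for binary labels (1 = positive)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


def prototype_model(amount, threshold=1000.0):
    """Hypothetical scaled-down model: flag transactions above a fixed amount."""
    return 1 if amount > threshold else 0


def evaluate_poc(samples, criteria):
    """Run the prototype on (amount, label) pairs and list criteria it misses."""
    y_true = [label for _, label in samples]
    y_pred = [prototype_model(amount) for amount, _ in samples]
    precision, recall = precision_recall(y_true, y_pred)
    observed = {"precision": precision, "recall": recall}
    # Each gap records (observed value, required minimum) for a missed criterion.
    gaps = {
        name: (observed[name], minimum)
        for name, minimum in criteria.items()
        if observed[name] < minimum
    }
    return observed, gaps


# Small made-up sample: (transaction amount, 1 if fraudulent else 0).
samples = [(1500.0, 1), (200.0, 0), (1200.0, 0), (3000.0, 1), (50.0, 0), (900.0, 1)]
criteria = {"precision": 0.6, "recall": 0.8}  # assumed success criteria
observed, gaps = evaluate_poc(samples, criteria)
```

Here the prototype clears the precision criterion but falls short on recall, so `gaps` points at the weakness to refine before moving on to full-scale training.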

STEP 4: MODEL TRAINING PROGRAM

Outcome: Train, evaluate, and refine a robust model through multiple iterations to ensure it is fully optimized and ready for deployment.

Focus: This step emphasizes systematic and iterative training, testing, and refinement of the model using comprehensive datasets. The aim is to solidify the model's performance, reliability, and scalability to meet established success criteria.

Key Activities:

First Iteration:

- Data Preparation: Prepare and preprocess data for initial model training.

- Data Modeling: Train the model using selected techniques.

- Evaluation: Conduct initial evaluation to assess model performance.

- Result Documentation: Document results and performance metrics.

- Collect Feedback: Gather initial feedback from stakeholders on model performance.

- Lesson Learning: Identify and document key learnings for improvement.

Second Iteration:

- Data Preparation: Refine data preparation based on insights from the first iteration.

- Data Modeling: Re-train the model incorporating adjustments.

- Evaluation: Re-evaluate the model to track improvements and resolve any previous issues.

- Result Documentation: Update documentation to reflect changes and second iteration results.

- Collect Feedback: Obtain further feedback to ensure the model meets stakeholder expectations.

- Lesson Learning: Document additional learnings and prepare for final adjustments.

Final Iteration:

- Data Preparation: Make final adjustments to data preparation for optimal training results.

- Data Modeling: Apply final refinements to the model training process.

- Evaluation: Conduct a thorough final evaluation to ensure all criteria are met.

- Result Documentation: Complete comprehensive documentation of the model's performance and readiness.

- Collect Feedback: Gather final stakeholder feedback to confirm the model's readiness for deployment.

- Lesson Learning: Finalize learnings and document the model’s development journey.

Results:

- A fully trained and refined model ready for real-world application.

- Comprehensive documentation of performance across all iterations, demonstrating the model’s effectiveness and efficiency.

- In-depth validation results confirming the model's accuracy, reliability, and scalability.

- Finalized settings of optimized parameters enhancing the model's performance.

- Complete endorsement from stakeholders based on the model’s performance and iterative improvements.

- The model is fully prepared and validated for deployment, backed by extensive documentation and stakeholder feedback.

- Note: The number of iterations is not fixed. It may change based on evolving project requirements, stakeholder feedback, and new insights from data analysis, so the project can adapt to new information and stay aligned with stakeholder expectations throughout development.

- A detailed record of all iterations, feedback incorporated, and lessons learned, supporting transparency and accountability.
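The iteration cycle in this step (prepare, model, evaluate, document, collect feedback, learn) can be pictured as a loop with a flexible number of passes. The Python sketch below is one possible way to express it, not a prescribed implementation: `run_training_program`, `IterationRecord`, the stubbed scores, and the 0.85 target are all hypothetical, and the two callables stand in for a team's real training and feedback processes.

```python
from dataclasses import dataclass


@dataclass
class IterationRecord:
    """Documentation kept for one iteration (hypothetical structure)."""
    number: int
    score: float
    feedback: str
    lessons: str


def run_training_program(train_and_evaluate, collect_feedback, target, max_iterations=5):
    """Repeat the iteration cycle until the target is met or the budget runs out."""
    history = []
    for number in range(1, max_iterations + 1):
        score = train_and_evaluate(number)          # data preparation, modeling, evaluation
        feedback = collect_feedback(number, score)  # stakeholder input on this iteration
        met = score >= target
        lessons = "target met" if met else f"short of target by {target - score:.2f}"
        history.append(IterationRecord(number, score, feedback, lessons))
        if met:
            break  # no further iterations needed
    return history


# Stubbed scores standing in for real training runs (made-up numbers).
stub_scores = {1: 0.71, 2: 0.78, 3: 0.86, 4: 0.87}
history = run_training_program(
    train_and_evaluate=lambda n: stub_scores[n],
    collect_feedback=lambda n, s: f"iteration {n} reviewed at score {s:.2f}",
    target=0.85,
)
```

With these stubbed scores the loop stops after the third iteration, and `history` holds the per-iteration record of results, feedback, and lessons. A different target or different scores would change the number of iterations, which is the point of keeping the count flexible.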

STEP 5: DEPLOYMENT AND BUSINESS EVALUATION

Outcome: Ensure that the model is fully integrated, tested, and evaluated by business users to confirm it meets operational requirements and delivers the expected business value.

Focus: This step emphasizes the practical implementation of the model within the business environment and evaluates its performance through user acceptance tests. It aims to verify that the model satisfies all business requirements and is ready for full-scale use.

Key Activities:

- Finalize all technical and business integration plans. Ensure the production environment and support systems are ready for deployment.

- Roll out the model into the production environment, ensuring it integrates smoothly with existing business processes and systems.

- Conduct thorough user acceptance testing (UAT) with end-users to validate the model’s functionality, usability, and performance in real business scenarios. Focus on how well the model meets the business objectives and delivers value.

- Collect detailed feedback from users during UAT to identify any issues, gather suggestions for improvements, and understand the model's impact on business operations.

- Assess the model’s effectiveness in achieving the intended business outcomes. Evaluate the business impact against the predefined success metrics.

- Prepare comprehensive documentation and reports detailing the deployment process, UAT findings, user feedback, and business evaluation results.

- Make any necessary adjustments to the model based on feedback and evaluation outcomes to enhance performance and user satisfaction.

- Conduct a final project review to summarize findings, lessons learned, and confirm project completion. Plan for post-deployment support and ongoing maintenance.

Results:

- The model is fully operational in the production environment with all technical issues resolved.

- UAT confirms that the model meets or exceeds all business expectations, delivering tangible benefits.

- Feedback from business users confirms the model's usability and effectiveness in real-world conditions.

- Complete and detailed documentation of the deployment process, business evaluation, and user acceptance, ensuring transparency and accountability.

- Insights from user feedback and business evaluation guide further refinements, enhancing the model’s relevance and performance.

- Based on the feedback and the issues identified, the project team may return to the Model Training Program (Step 4) to refine the model, adjust parameters, or even retrain it with new data or techniques to better meet the requirements.

- A clear closure of the project with documented success, and planned strategies for long-term model maintenance and enhancement.
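Evaluating business impact against predefined success metrics, and deciding whether to return to Step 4, can be sketched as a simple comparison. The Python below is an illustrative assumption, not part of the framework: the metric names, targets, issue format, and the rule that any shortfall or blocking UAT issue sends the project back to Step 4 are all invented for the example.

```python
def business_evaluation(observed, targets, uat_issues):
    """Compare observed business metrics to targets and suggest a next step.

    Assumes every metric is "higher is better" and that UAT issues are
    dicts with a "severity" key (both are assumptions of this sketch).
    """
    shortfalls = {
        name: {"observed": observed.get(name), "target": target}
        for name, target in targets.items()
        if observed.get(name) is None or observed[name] < target
    }
    blocking = [issue for issue in uat_issues if issue["severity"] == "blocking"]
    if shortfalls or blocking:
        decision = "return to Step 4"
    else:
        decision = "proceed to Step 6"
    return {"shortfalls": shortfalls, "blocking_issues": blocking, "decision": decision}


# Made-up targets, observations, and UAT feedback.
targets = {"fraud_cases_caught_pct": 75.0, "analyst_hours_saved": 20.0}
observed = {"fraud_cases_caught_pct": 81.0, "analyst_hours_saved": 14.0}
uat_issues = [{"id": "UAT-1", "severity": "minor"}]

result = business_evaluation(observed, targets, uat_issues)
```

In this example one metric misses its target, so the sketch suggests returning to the Model Training Program. In practice the team and stakeholders would make that call, using a summary like this as input.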

STEP 6: OPERATION AND MAINTENANCE

Outcome: Ensure the deployed model remains effective, up-to-date, and compliant with governance standards through continuous monitoring, updates, maintenance, and strict governance protocols.

Focus: This step focuses on maintaining operational excellence and compliance of the model through stringent governance practices alongside ongoing performance management. It ensures that the model operates within regulatory requirements and ethical standards, and remains aligned with strategic business objectives.

Key Activities:

- Implement monitoring tools that also track compliance with governance standards. Monitor model performance, decision processes, and adherence to ethical guidelines.

- Schedule regular updates and perform routine audits of the model to ensure it complies with current laws and industry standards. Retrain the model with new data under governed protocols.

- Regularly evaluate the model not just against business outcomes and performance metrics, but also for compliance with governance frameworks. Adjust governance policies as needed.

- Maintain transparency by regularly reporting to stakeholders about the model’s performance and governance. Include details on compliance, decision making, and control mechanisms in reports.

- Develop a robust process for handling governance issues detected during operations. Include a rapid response plan for ethical concerns or compliance breaches.

- Keep all documentation, including governance policies and procedures, up-to-date with changes to the model. Document all governance-related decisions and actions.

- Provide ongoing training focused on governance and compliance, ensuring that all team members understand their roles in upholding standards and are aware of the latest regulatory requirements.

- Plan for the long-term sustainability of the model with a focus on governance. Consider future legal and ethical challenges and prepare to address them.

Results:

- The model consistently meets compliance and performance standards, maintaining its credibility and legality.

- Model governance frameworks are strictly followed, ensuring decisions are transparent, auditable, and aligned with ethical standards.

- Rapid identification and resolution of any governance or compliance issues minimize risk and uphold organizational integrity.

- Regular updates and transparent communication keep stakeholders informed about governance status and model performance.

- Improvements based on governance reviews and audits enhance the model's strategic alignment and operational confidence.

- Detailed records of governance actions, updates, and compliance checks provide a clear audit trail.

- Ongoing governance planning ensures the model remains viable and compliant amid evolving regulations and business landscapes.
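One common way to monitor a deployed model is to check whether incoming data has drifted away from the data it was trained on. AIP-DM does not prescribe a specific technique, so the Python sketch below swaps in the Population Stability Index (PSI) as an example. The bin counts are made up, and the 0.1 and 0.25 thresholds are a widely used rule of thumb rather than a requirement.

```python
import math


def psi(expected_counts, actual_counts, eps=1e-6):
    """Population Stability Index between two binned distributions."""
    e_total = sum(expected_counts)
    a_total = sum(actual_counts)
    value = 0.0
    for e, a in zip(expected_counts, actual_counts):
        e_share = max(e / e_total, eps)  # eps avoids log(0) for empty bins
        a_share = max(a / a_total, eps)
        value += (a_share - e_share) * math.log(a_share / e_share)
    return value


def drift_status(value, warn=0.1, alert=0.25):
    """Translate a PSI value into a monitoring status (assumed thresholds)."""
    if value >= alert:
        return "retrain review"
    if value >= warn:
        return "watch"
    return "stable"


# Made-up bin counts for one input feature: training data vs. live data.
training_bins = [25, 25, 25, 25]
live_bins = [10, 20, 30, 40]

score = psi(training_bins, live_bins)

# Keep a simple audit trail entry for governance reporting.
audit_log = []
audit_log.append(
    {"check": "input drift (PSI)", "value": round(score, 3), "status": drift_status(score)}
)
```

Each check appends an entry to `audit_log`, which gives a basic record of monitoring results that can feed the stakeholder reports and audit trail described above.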

By choosing the AIP-DM framework, you use a strong and reliable method that is built on important principles. These principles help improve the quality of your work, make your project flexible, and ensure that you follow ethical standards in your data science projects. This method not only makes your projects more successful but also creates a work environment where everyone works well together and shares information openly.

When you use AIP-DM, your team can communicate better, stay organized, and handle changes smoothly. The result is higher-quality projects that adapt to new challenges and are carried out fairly and transparently, with a happier, more productive team and a more trustworthy workplace behind them.

Siddhesh Dongare

Inventor (AIP-DM & UnLeASH Agile Methodology) | Awarded Agility Coach (CAL-E® | CAL-T® | CAL-O® | PAL-EBM® | ICP-ENT®) | PMI PMP® | PMI ACP® | Product Owner - Data Science (AI & ML) & Engineering | Book Author